Understanding Server Response Time and Cache Strategies

Server response time refers to the amount of time it takes for a server to respond to a request. Optimising response times is crucial for delivering a good user experience on websites and web applications. Slow response times lead to poor engagement and higher abandonment rates. In this article, we dive deeper into what affects server response time and the strategies available to improve it.

Components of Server Response Time

There are two primary components that make up server response time:

1. Server Processing Time: This is the amount of time a server needs to process a request and generate a response. It includes executing application logic, retrieving data from databases, rendering HTML, and tasks such as image processing or PDF generation. Processing time depends on the application architecture, hosting infrastructure, database queries, code efficiency and other factors. Complex applications and unoptimised code result in slower processing times.

2. Network Latency: This refers to the time required for the request and response data to travel across the network between client and server. It is affected by the geographical distance between user and server, the number of network hops, and congestion or limited bandwidth along the route. Because it depends on physical constraints, network latency is often one of the hardest factors to optimise.
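One way to tell these two components apart is to have the server report its own processing time. The Python sketch below, which assumes a hypothetical `render_home` handler and an arbitrary metric name `app`, times a handler and formats the result as a `Server-Timing` response header, a W3C-specified header whose values browser developer tools can display. Subtracting the reported duration from the waiting time seen in the browser gives a rough estimate of how much of the total is spent outside the application, mostly on the network.

```python
import time
from typing import Callable


def timed(handler: Callable[[], str]) -> Callable[[], tuple[str, dict[str, str]]]:
    """Wrap a handler so it also returns a Server-Timing header with its duration."""
    def wrapper() -> tuple[str, dict[str, str]]:
        start = time.perf_counter()
        body = handler()  # application logic, database queries, rendering...
        elapsed_ms = (time.perf_counter() - start) * 1000
        # Server-Timing syntax: <metric-name>;dur=<milliseconds>
        headers = {"Server-Timing": f"app;dur={elapsed_ms:.1f}"}
        return body, headers
    return wrapper


@timed
def render_home() -> str:
    # Hypothetical stand-in for real server processing work.
    time.sleep(0.02)
    return "<h1>Home</h1>"


body, headers = render_home()
print(headers["Server-Timing"])  # prints app;dur=<measured milliseconds>
```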

Importance of Fast Response Times

Improving server response time offers a multitude of benefits that extend beyond just technical optimisation. Let’s delve deeper into each of these advantages:

1. Better User Experience:

- Perception of Speed: Users tend to perceive faster-loading websites as more reliable and professional. A swift server response time creates a positive first impression and instils confidence in the website’s performance.

- Reduced Bounce Rates: Faster websites experience lower bounce rates as users are more likely to stay and explore when they encounter responsive and quickly loading pages.

2. Higher Conversion Rates:

- Critical for E-commerce: In the competitive landscape of e-commerce, small delays add up. Studies have shown that even a minor delay in page loading can noticeably reduce conversion rates; one widely cited estimate is that every 100 milliseconds of added delay can cost around 1% of conversions, highlighting the role of server response time in driving sales and revenue.

- Improved Checkout Experience: A fast server response time during checkout instils confidence in users and reduces the likelihood of cart abandonment, ultimately leading to higher conversion rates and increased revenue.

3. Stronger Engagement:

- Encourages Interaction: Quick and responsive applications encourage users to engage more actively with the content. Whether it’s browsing through products, reading articles, or interacting with multimedia elements, faster response times promote a smoother and more enjoyable user experience, fostering greater engagement and interaction.

4. Increased Productivity:

- Enhanced Workflow Efficiency: Faster server response times translate to quicker loading times for internal applications and tools used by employees. This efficiency boost enables employees to complete tasks more rapidly, leading to increased productivity and smoother workflow operations.

- Reduced Frustration: Employees waste less time waiting for applications to respond, leading to reduced frustration and a more positive work environment. This improved efficiency ultimately contributes to higher overall productivity levels within the organisation.

5. Reduced IT Costs:

- Optimised Resource Utilisation: Faster applications require fewer server resources to handle the same volume of requests. By optimising server response time, organisations can streamline resource allocation and achieve higher levels of efficiency without the need for additional hardware or infrastructure investments.

- Lower Maintenance Overheads: With fewer resources dedicated to managing and maintaining server infrastructure, organisations can realise cost savings in terms of IT personnel, hardware maintenance, and operational expenses.

In essence, improving server response time transcends mere technical optimisation—it directly impacts user satisfaction, revenue generation, employee productivity, and overall operational efficiency. By prioritising performance optimisation and implementing effective strategies, organisations can reap the manifold benefits of faster, more responsive applications, thereby gaining a competitive edge in today’s digital landscape.

Strategies to Optimise Response Time

Optimising response times is paramount in ensuring a smooth and efficient user experience for web applications. Let’s delve into each key strategy to understand how they contribute to improving response times:

1. Code Optimisation:

- Refactoring Inefficient Code: Identify and refactor code segments that are computationally expensive or inefficient, optimising algorithms and improving overall execution speed.

- Database Query Optimisation: Fine-tune database queries by adding indexes, reducing unnecessary joins, and minimising data retrieval overhead to expedite data access.

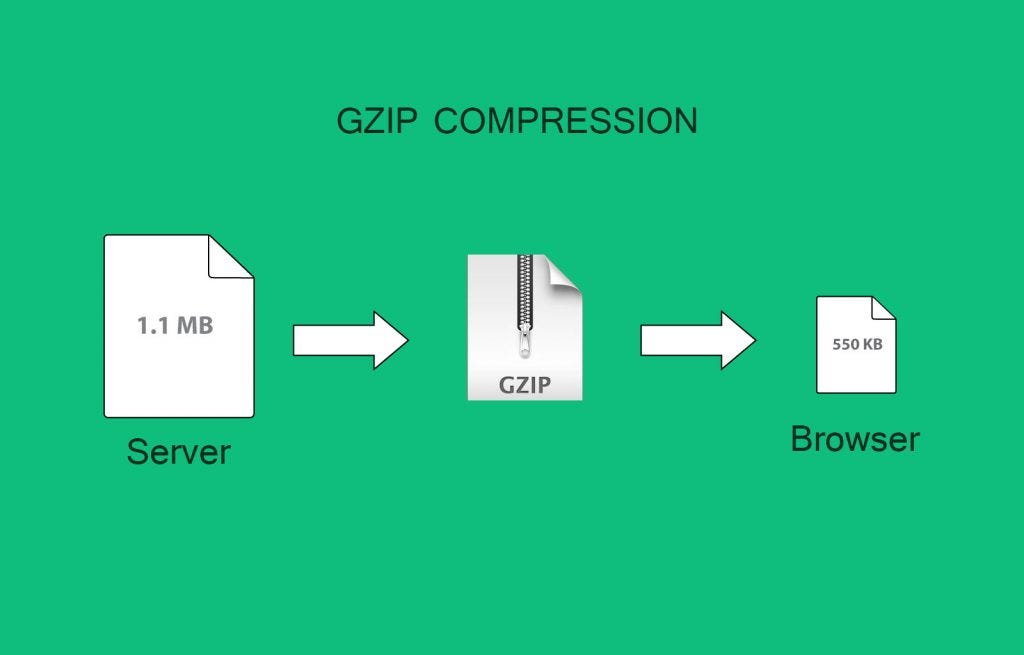

- Payload Compression: Compressing payload sizes, such as JSON responses or HTML documents, using techniques like gzip compression reduces bandwidth usage and speeds up data transmission.

2. Content Delivery Networks (CDN):

- Proximity to Users: CDNs distribute static assets such as images, CSS, and JavaScript files across geographically dispersed servers, bringing content closer to users and reducing latency.

- Caching Mechanisms: CDNs employ caching mechanisms to store frequently accessed content at edge locations, further enhancing response times by serving content directly from the nearest edge server.

3. Caching and Buffering:

- In-Memory Caching: Cache frequently accessed data, responses, and templates in memory to avoid repeating work and expedite subsequent requests.

- Response Caching: Cache dynamic content at the server-side or proxy level to minimise processing overhead and decrease response times for recurring requests.

4. Load Balancing:

- Even Distribution of Traffic: Load balancers distribute incoming requests across multiple backend servers, preventing any single server from becoming overwhelmed and ensuring optimal resource utilisation.

- Horizontal Scaling: Scaling out by adding more servers horizontally allows for increased capacity and better handling of peak traffic loads, further enhancing response times.

5. Asynchronous Processing:

- Queue-Based Architecture: Implement queues and background workers to handle time-consuming tasks asynchronously, freeing up the main application thread to respond to user requests promptly.

- Improved Scalability: Asynchronous processing enables better scalability and responsiveness by decoupling resource-intensive tasks from the main request-handling flow.

6. Compression:

- Gzip Compression: Compressing HTTP responses using gzip or similar algorithms reduces payload sizes, minimising data transfer time and improving response times, particularly for text-based content.

7. Removing Unnecessary Functionality:

- Simplification: Streamline applications by eliminating unused features, dependencies, and code segments that add unnecessary complexity and overhead.

- Leaner Codebase: A leaner codebase translates to faster execution times, reduced memory footprint, and improved overall performance.
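To illustrate the compression points above (under Code Optimisation and Compression), the following Python sketch gzips a JSON payload with the standard-library `gzip` module, but only when the client's `Accept-Encoding` header allows it. The payload and the `encode_response` helper are hypothetical, and the header check is deliberately simplified. The `Vary: Accept-Encoding` header tells caches such as CDNs to store compressed and uncompressed variants separately. In practice, compression is usually switched on in the web server, reverse proxy or CDN rather than written into application code.

```python
import gzip
import json


def encode_response(body: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Gzip the body only if the client advertised gzip support.

    Simplified: a real server would parse Accept-Encoding fully, including q-values.
    """
    if "gzip" in accept_encoding.lower():
        headers = {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}
        return gzip.compress(body, compresslevel=6), headers
    return body, {}


# Hypothetical API response: 500 similar product records.
products = [{"id": i, "name": f"Product {i}", "in_stock": True} for i in range(500)]
raw = json.dumps(products).encode("utf-8")

compressed, headers = encode_response(raw, "gzip, deflate, br")
print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes")
print(headers)  # {'Content-Encoding': 'gzip', 'Vary': 'Accept-Encoding'}

# The browser reverses the encoding transparently.
assert gzip.decompress(compressed) == raw
```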

By incorporating these key strategies into web application development and optimisation efforts, developers can significantly enhance response times, resulting in a more responsive, efficient, and user-friendly online experience.
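The queue-based architecture described under Asynchronous Processing can be sketched in a single Python process using the standard `queue` and `threading` modules: the request handler only enqueues a job and responds immediately, while a background worker does the slow part. `generate_pdf` and `handle_checkout` are hypothetical names; a production system would more likely use an external message broker and separate worker processes, so that queued jobs survive restarts and workers can be scaled independently.

```python
import queue
import threading
import time

jobs: queue.Queue = queue.Queue()
results: dict[str, str] = {}


def generate_pdf(order_id: str) -> str:
    """Hypothetical slow task that should not block the request."""
    time.sleep(0.05)
    return f"invoice-{order_id}.pdf"


def worker() -> None:
    """Background worker: pull jobs off the queue and process them one by one."""
    while True:
        order_id = jobs.get()
        if order_id is None:  # sentinel value used to stop the worker on shutdown
            jobs.task_done()
            break
        results[order_id] = generate_pdf(order_id)
        jobs.task_done()


def handle_checkout(order_id: str) -> dict[str, str]:
    """Request handler: enqueue the slow work and respond at once."""
    jobs.put(order_id)
    return {"status": "accepted", "order_id": order_id}


threading.Thread(target=worker, daemon=True).start()

start = time.perf_counter()
response = handle_checkout("A100")
print(response, f"returned in {(time.perf_counter() - start) * 1000:.2f} ms")

jobs.join()  # demo only: wait for the background work to finish
print(results)  # {'A100': 'invoice-A100.pdf'}
```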

Caching

Caching plays a pivotal role in augmenting website performance by storing frequently accessed data and serving it swiftly to users. Effective cache strategies reduce server load and expedite content delivery.

The Importance of Caching

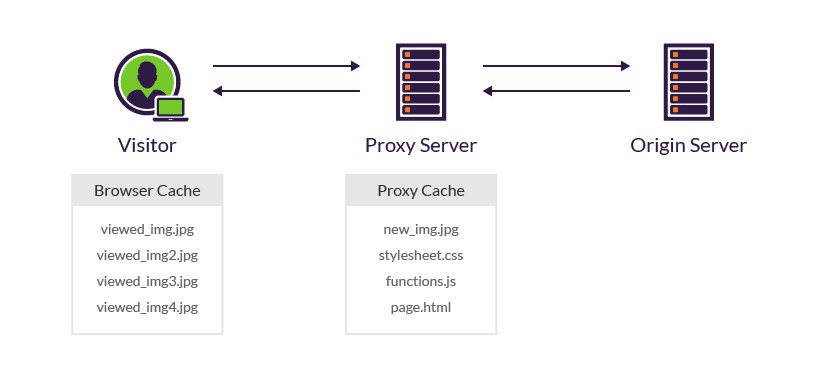

Caching is one of the most effective strategies for optimising server response times. By storing frequently accessed data in fast storage, and in some cases closer to users, sites can avoid repeating backend work and, with browser and CDN caches, avoid round-trip delays to the origin server altogether. The two main types of caching are browser caching and server-side caching.

Browser Caching

Browser caching stores static assets like images, CSS files, JavaScript and HTML pages in the browser’s cache on the user’s local computer. Instead of retrieving these assets from the remote server each time, the browser loads them from the local cache, which is much faster. Browser caching works well for static resources that don’t change often.

Some best practices for leveraging browser caching include:

- Setting far-future Expires or Cache-Control max-age headers so assets are cached for longer periods.

- Fingerprinting asset filenames with a hash of their contents so that updated files get new, cache-busting names.

- Serving assets from a cookieless domain so asset requests and responses are not weighed down by cookie headers, which add overhead and can prevent some shared caches from storing responses.

- Tuning cache lifetimes for different asset types based on how often they are updated.
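The first two practices are often combined: because a fingerprinted file gets a new name whenever its contents change, it can safely be cached for a very long time. A minimal Python sketch, assuming a hypothetical build step that renames assets and a policy of one year for fingerprinted files and `no-cache` for HTML (which lets the browser store the page but makes it revalidate with the server before reuse, so it always picks up the latest asset names):

```python
import hashlib
from pathlib import PurePosixPath

ONE_YEAR = 365 * 24 * 60 * 60  # seconds


def fingerprint(path: str, content: bytes, length: int = 8) -> str:
    """Insert a short content hash into the filename: app.css -> app.<hash>.css."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def cache_headers(path: str) -> dict[str, str]:
    """Choose a Cache-Control policy by asset type (hypothetical policy)."""
    if path.endswith(".html"):
        # Always revalidate HTML so it can reference newly fingerprinted assets.
        return {"Cache-Control": "no-cache"}
    # A fingerprinted asset never changes under the same name.
    return {"Cache-Control": f"public, max-age={ONE_YEAR}, immutable"}


css = b"body { margin: 0; }"
name = fingerprint("static/app.css", css)
print(name)                         # static/app.<8 hex characters>.css
print(cache_headers(name))          # {'Cache-Control': 'public, max-age=31536000, immutable'}
print(cache_headers("index.html"))  # {'Cache-Control': 'no-cache'}
```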

Server-Side Caching

Server-side caching, facilitated by fast in-memory stores like Memcached or Redis, plays a crucial role in improving application performance by reducing latency and minimising redundant processing. Here are some guidelines for effectively implementing server-side caching:

1. Cache Database Query Results and API Call Responses:

- Avoiding Redundant Queries: Cache the results of frequently executed database queries and responses from external API calls to eliminate the need for repetitive and resource-intensive data retrieval operations.

- Consider Data Volatility: Prioritise caching data that is relatively stable and changes infrequently to maximise cache efficiency and effectiveness.

2. Cache Rendered Views, Fragments, and Page Markup:

- Prevent Repetitive Rendering: Cache the rendered views, fragments, and page markup generated by the application to avoid recomputation for every page load.

- Granular Caching: Cache specific sections or components of the page that are reusable across multiple requests to optimise caching efficiency.

3. Implement Cache-Aside Pattern:

- Efficient Cache Lookup: Adopt the cache-aside pattern, where the application first checks the cache for the requested data before querying the database.

- Fetch-on-Miss: If the data is not found in the cache, fetch it from the database or external service and store it in the cache for subsequent requests.

4. Expire and Invalidate Cache on Data Changes:

- Maintain Data Consistency: Define cache eviction policies and utilise events or triggers to expire and invalidate cache entries when underlying data changes occur.

- Avoid Stale Data: Ensure that cached data remains up-to-date to prevent serving stale or outdated information to users, which could lead to inconsistencies and erroneous application behaviour.

5. Monitor for Stale or Outdated Cached Data:

- Regular Cache Inspection: Implement mechanisms to monitor the freshness of cached data and detect entries that have become stale or outdated.

- Automated Alerts: Set up automated alerts or notifications to prompt cache invalidation or data refresh when anomalies or discrepancies are detected.
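The cache-aside pattern from guideline 3, combined with time-based expiry, can be sketched in Python as follows. To keep the example self-contained, a plain dictionary stands in for Memcached or Redis, and `load_user_from_db` is a hypothetical slow query; with a real store the same flow would use its own get and set-with-expiry operations.

```python
import time
from typing import Any, Callable


class TTLCache:
    """In-process stand-in for Memcached/Redis: key -> (expires_at, value)."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        """Return the cached value, or None on a miss or after expiry."""
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:  # expired: drop it and treat as a miss
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._data[key] = (time.monotonic() + ttl, value)


def cache_aside(cache: TTLCache, key: str, loader: Callable[[], Any], ttl: float) -> Any:
    """Check the cache first; on a miss, load from the source and store the result.

    Note: None is used as the miss marker, so None values are never cached.
    """
    value = cache.get(key)
    if value is None:
        value = loader()
        cache.set(key, value, ttl)
    return value


db_calls = 0


def load_user_from_db(user_id: int) -> dict[str, Any]:
    """Hypothetical slow database query."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": "Ada"}


cache = TTLCache()
for _ in range(3):
    user = cache_aside(cache, "user:42", lambda: load_user_from_db(42), ttl=60)
print(user, "db calls:", db_calls)  # {'id': 42, 'name': 'Ada'} db calls: 1
```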

Effective server-side caching strategies are instrumental in enhancing application performance, scalability, and responsiveness. By caching database query results, API responses, rendered views, and page markup, developers can minimise latency and optimise resource utilisation. However, it’s essential to implement cache invalidation mechanisms, adhere to caching best practices, and remain vigilant against the risks of serving stale or outdated cached data. With careful planning and implementation, server-side caching can significantly improve the user experience and overall performance of dynamic web applications.
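Guideline 2 can be applied at the level of individual page fragments. In the Python sketch below, each product card's rendered HTML is cached under a key that includes the record's last-modified timestamp, so editing a product produces a new key and only that card is re-rendered. The `Product` type, key format and functions are hypothetical, and a dictionary stands in for the cache; in a real store, orphaned fragments under old keys would be removed by a TTL or the store's eviction policy rather than piling up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    id: int
    name: str
    price_pence: int
    updated_at: int  # hypothetical: bumped whenever the record is edited


fragments: dict[str, str] = {}  # stand-in for Memcached/Redis
render_count = 0


def fragment_key(p: Product) -> str:
    # Including updated_at means an edited product gets a fresh key automatically.
    return f"fragment:product-card:{p.id}:{p.updated_at}"


def render_card(p: Product) -> str:
    """Return the cached card HTML, rendering it only on a cache miss."""
    global render_count
    key = fragment_key(p)
    html = fragments.get(key)
    if html is None:
        render_count += 1  # the expensive templating work would happen here
        html = f'<div class="card"><h2>{p.name}</h2><p>{p.price_pence / 100:.2f}</p></div>'
        fragments[key] = html
    return html


def render_listing(products: list[Product]) -> str:
    # The page is assembled from reusable fragments rather than rendered from scratch.
    return "\n".join(render_card(p) for p in products)


kettle = Product(1, "Kettle", 2499, updated_at=100)
toaster = Product(2, "Toaster", 1999, updated_at=100)
render_listing([kettle, toaster])
render_listing([kettle, toaster])
print(render_count)  # 2: the second listing reused both fragments

kettle = Product(1, "Kettle", 2299, updated_at=101)  # price edited
render_listing([kettle, toaster])
print(render_count)  # 3: only the edited card was re-rendered
```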

Cache Invalidation

Cache invalidation poses a significant challenge in caching strategies as it involves ensuring that cached data remains up-to-date and consistent with changes in the source data. Let’s delve into each cache invalidation strategy in detail:

1. Set TTL (Time-To-Live) Based Expiration:

- Forced Data Refresh: With a fixed TTL, each cached entry expires automatically once the period has elapsed, and the next request for it re-fetches the data from the source.

- Trade-off between Freshness and Performance: Shorter TTLs ensure fresher data but may lead to increased load on the server due to frequent re-fetching, while longer TTLs optimise performance but risk serving stale data.

2. Use Events, Webhooks, or Signals:

- Real-time Invalidation: Implement mechanisms such as events, webhooks, or signals to actively trigger cache invalidation whenever relevant data updates occur.

- Ensuring Consistency: This approach ensures that cached data is promptly invalidated and refreshed upon changes in the source data, maintaining consistency between the cache and the data source.

3. Version Identifiers in Cache Keys:

- Efficient Cache Invalidation: Embed version identifiers in cache keys or metadata to facilitate easy identification and invalidation of outdated cache entries.

- Flush on Deployment: Upon deploying updates or new versions of the application, increment the version identifier to invalidate all cached entries associated with the previous version, ensuring a clean cache state.

4. Segment Cache by Categories, Regions, or User Groups:

- Granular Control: Partition the cache based on categories, regions, or user groups to enable more targeted and granular cache invalidation.

- Fine-tuned Expiration Policies: Apply different expiration policies to each segment based on its volatility and importance, allowing for optimised cache management.
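Strategies 3 and 4 combine naturally: keep a version number per cache segment (plus an application version that changes on deployment) and embed both in every key. Bumping a version makes all the old keys unreachable at once, without scanning or deleting them. The Python sketch below uses hypothetical segment names and a dictionary in place of a real store, where the orphaned entries would then age out through their TTL or eviction.

```python
from typing import Any

APP_VERSION = "v7"  # hypothetical release identifier, changed on each deployment


class VersionedCache:
    """Cache whose keys embed a deploy version and a per-segment version."""

    def __init__(self) -> None:
        self._store: dict[str, Any] = {}
        self._segment_versions: dict[str, int] = {}

    def _key(self, segment: str, key: str) -> str:
        seg_ver = self._segment_versions.get(segment, 1)
        return f"{APP_VERSION}:{segment}:{seg_ver}:{key}"

    def get(self, segment: str, key: str) -> Any:
        return self._store.get(self._key(segment, key))

    def set(self, segment: str, key: str, value: Any) -> None:
        self._store[self._key(segment, key)] = value

    def invalidate_segment(self, segment: str) -> None:
        # Constant-time invalidation: old keys are simply never looked up again.
        self._segment_versions[segment] = self._segment_versions.get(segment, 1) + 1


cache = VersionedCache()
cache.set("catalogue:uk", "product:1", {"price": 10})
cache.set("catalogue:us", "product:1", {"price": 12})

cache.invalidate_segment("catalogue:uk")       # e.g. UK prices were updated
print(cache.get("catalogue:uk", "product:1"))  # None: will be reloaded on next use
print(cache.get("catalogue:us", "product:1"))  # {'price': 12}: other segment untouched
```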

Challenges and Considerations:

- Overhead: Implementing cache invalidation mechanisms adds overhead in terms of processing and resource utilisation, which must be carefully managed to avoid performance degradation.

- Consistency vs. Performance: Striking the right balance between maintaining data consistency and optimising performance is crucial, as overly aggressive cache invalidation may lead to increased latency and server load.

- Complexity: Managing cache invalidation logic and ensuring its correctness across distributed systems or microservices architectures can be complex and challenging.

Effective cache invalidation strategies are essential for maintaining data consistency and ensuring that cached data remains relevant and up-to-date. By leveraging techniques such as TTL-based expiration, real-time invalidation mechanisms, version identifiers, and segmented caching, developers can mitigate the risk of serving stale data from the cache while optimising performance and resource utilisation. However, it’s imperative to carefully evaluate the trade-offs and complexities associated with each strategy to strike the right balance between consistency, performance, and scalability in caching implementations.
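Real-time invalidation (strategy 2) can be sketched with a tiny in-process publish/subscribe hook in Python. The `EventBus` class, the `product.updated` event name and `update_product_price` are hypothetical; in a distributed system the same role is typically played by a message broker, database change notifications or a webhook endpoint, so that every application instance drops its cached copy.

```python
from collections import defaultdict
from typing import Any, Callable

cache: dict[str, Any] = {"product:1": {"price": 10}}
database: dict[int, dict[str, Any]] = {1: {"price": 10}}


class EventBus:
    """Minimal publish/subscribe hub (stand-in for a broker or webhook)."""

    def __init__(self) -> None:
        self._handlers: defaultdict[str, list[Callable[..., None]]] = defaultdict(list)

    def subscribe(self, event: str, handler: Callable[..., None]) -> None:
        self._handlers[event].append(handler)

    def publish(self, event: str, **payload: Any) -> None:
        for handler in self._handlers[event]:
            handler(**payload)


bus = EventBus()


def drop_product_from_cache(product_id: int) -> None:
    cache.pop(f"product:{product_id}", None)


bus.subscribe("product.updated", drop_product_from_cache)


def update_product_price(product_id: int, price: int) -> None:
    database[product_id]["price"] = price  # 1. write to the source of truth first
    bus.publish("product.updated", product_id=product_id)  # 2. then announce the change


update_product_price(1, 12)
print("product:1" in cache)  # False: the next read repopulates it from the database
```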

Finding the right caching techniques requires thorough testing and monitoring. Measure cache hit ratios, expiration patterns and the age of cached data when tuning for optimal performance. Implement multiple layers of caching across browsers, servers, databases and CDNs for compounding benefits. With well-designed caching in place, sites can absorb many traffic surges with far less infrastructure scaling than they would otherwise need.
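As a starting point for measuring hit ratios, a cache wrapper can simply count hits and misses. The Python sketch below is a hypothetical dictionary-backed wrapper; for Redis, comparable server-wide counters are reported by the `INFO stats` command as `keyspace_hits` and `keyspace_misses`.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class InstrumentedCache:
    """Dictionary-backed cache that records hits and misses."""
    store: dict[str, Any] = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def get(self, key: str) -> Any:
        if key in self.store:
            self.hits += 1
            return self.store[key]
        self.misses += 1
        return None

    def set(self, key: str, value: Any) -> None:
        self.store[key] = value

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = InstrumentedCache()
for key in ["a", "b", "a", "a", "c", "b"]:
    if cache.get(key) is None:
        cache.set(key, key.upper())  # simulate loading from the origin on a miss
print(f"hits={cache.hits} misses={cache.misses} ratio={cache.hit_ratio:.2f}")
# hits=3 misses=3 ratio=0.50
```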

In conclusion, understanding server response time and implementing effective cache strategies are indispensable pursuits in the realm of web development. By optimising server response time and employing judicious cache mechanisms, developers can enhance website performance, improve user experience, and strengthen the competitive edge of online ventures. Embracing a holistic approach to performance optimisation ensures that websites deliver content swiftly and seamlessly, thereby fostering user satisfaction and driving business success in the digital domain.