A/B Testing Essentials: The Power of Experimentation in CRO

A/B testing is one of the most useful tools available to businesses looking to improve their online performance. By systematically comparing two or more variants of a webpage or app element, it allows businesses to make data-driven decisions and optimise their digital assets for conversions and user engagement. In this article, we cover the essentials of A/B testing and its role in conversion rate optimisation (CRO) strategies.

Understanding A/B Testing:

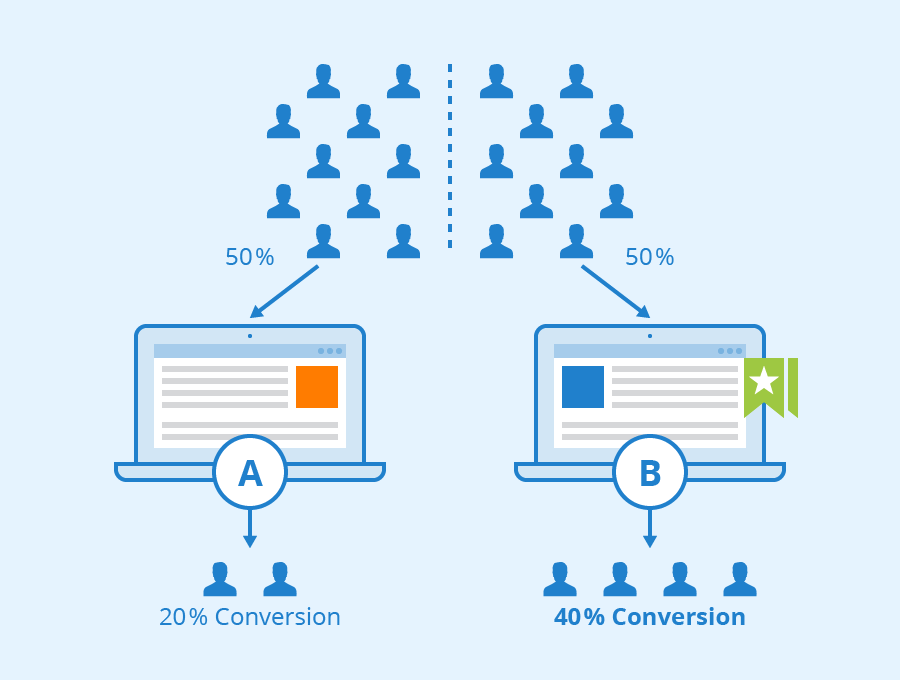

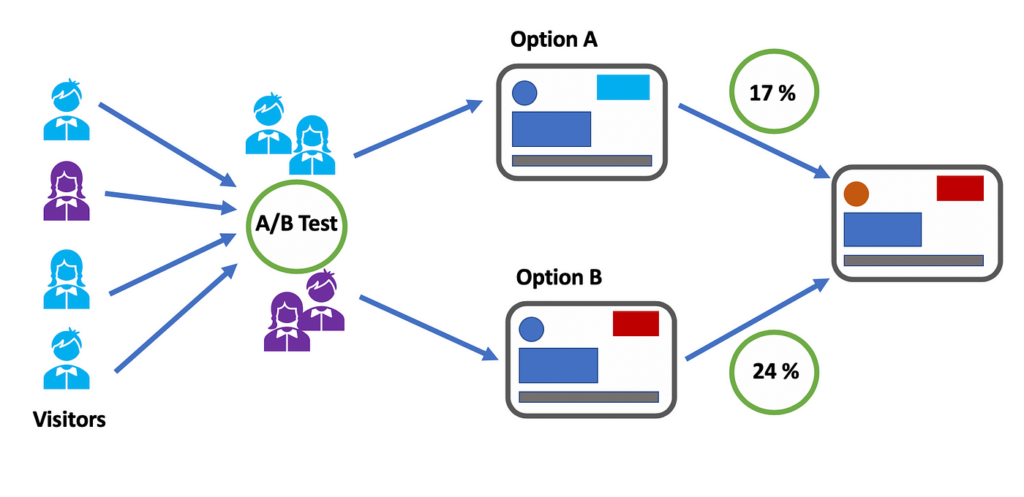

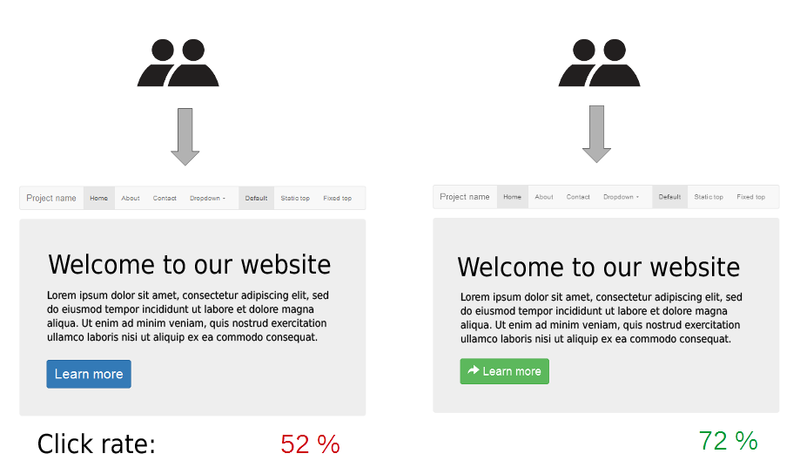

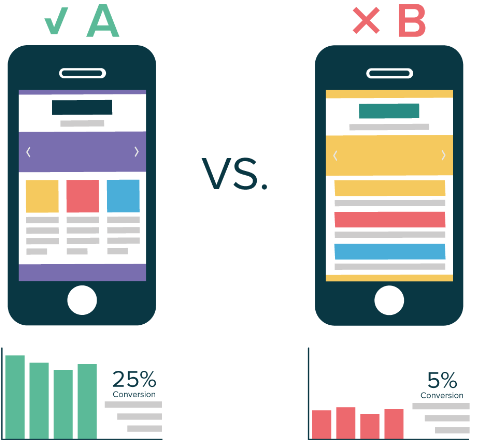

A/B testing, also known as split testing, is a method for comparing two or more variations of a webpage or element to determine which one performs better on predefined metrics, such as conversion rate, click-through rate, or bounce rate (for which lower is better). Each visitor is randomly assigned to one version, so systematic differences in the metric can be attributed to the change itself rather than to differences between the audiences who saw each version. This helps businesses identify the most effective design, content, or functionality to achieve their objectives.
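Random assignment is what makes the comparison fair. Many testing platforms handle it for you; if you implement it yourself, one common approach is to hash a stable visitor identifier, so a returning visitor always sees the same variant. The sketch below assumes each visitor has a persistent ID (for example, from a first-party cookie). The function name `assign_variant` and the experiment name are hypothetical, not part of any particular testing tool.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a visitor to a variant deterministically (hypothetical helper).

    Hashing the experiment name together with the user ID gives each visitor
    a stable, effectively random bucket: the same visitor always sees the same
    version, and separate experiments split traffic independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hex digest as a large integer and reduce it to a bucket index.
    return variants[int(digest, 16) % len(variants)]

print(assign_variant("user-42", "homepage-headline"))  # "A" or "B", the same on every call
```

Uneven splits (for example 90/10) can be obtained the same way by reducing the hash to a range such as 0 to 99 and comparing it with cut-off points.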

Importance of A/B Testing in CRO:

1. Data-Driven Decision Making:

A/B testing provides insight into user behaviour and preferences through quantitative data. Instead of relying on assumptions or guesswork, marketers can base their decisions on evidence gathered from controlled tests. This data-driven approach keeps optimisation efforts targeted and makes improvements in conversion rates measurable.

2. Continuous Improvement:

CRO is an ongoing process aimed at refining and optimising various aspects of a website or app to maximise conversions. A/B testing facilitates this iterative approach by enabling marketers to continuously test and tweak different elements, such as headlines, calls-to-action, layouts, and visuals. By systematically refining these components based on test results, businesses can achieve incremental gains in conversion rates over time.

3. Enhanced User Experience:

Optimising for conversions often goes hand in hand with improving the overall user experience (UX). A/B testing allows marketers to experiment with different design elements, navigation paths, and content formats to identify the most user-friendly configurations. By prioritising user preferences and behaviours, businesses can create a seamless and intuitive experience that encourages visitors to take desired actions.

4. Mitigating Risk:

Launching major website or app changes without proper testing can carry significant risks, including potential decreases in conversion rates or user satisfaction. A/B testing mitigates these risks by allowing marketers to validate hypotheses and changes on a smaller scale before implementing them universally. This incremental approach minimises the likelihood of negative impacts while maximising the potential for positive outcomes.

Key Metrics for A/B Testing Success: Defining Objectives and KPIs

Before embarking on an A/B testing campaign, it’s crucial to establish clear objectives and key performance indicators (KPIs) that align with your conversion goals. Whether your aim is to increase purchases, newsletter sign-ups, or form submissions, defining measurable objectives provides focus and direction for your testing strategy.

Common metrics used to evaluate A/B test results include conversion rate, click-through rate, bounce rate, and revenue per visitor. By tracking these metrics meticulously and comparing them between variations A and B, marketers can gauge the effectiveness of their experiments and make informed optimisation decisions.
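Each of these metrics is a simple ratio of counts. The sketch below assumes you can export per-variant totals from your analytics tool; the class and field names are hypothetical and should be mapped to whatever your platform actually reports, and the figures in the usage line are invented for illustration. Note that bounce rate is the one metric here where a lower value is better.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    """Per-variant totals exported from an analytics tool (hypothetical field names)."""
    visitors: int              # unique visitors who saw this variant
    conversions: int           # visitors who completed the goal (purchase, sign-up, ...)
    impressions: int           # times the tested element (e.g. a CTA) was shown
    clicks: int                # clicks on that element
    sessions: int              # sessions started on the tested page
    single_page_sessions: int  # sessions that ended without a second page view
    revenue: float             # total revenue attributed to this variant

    def conversion_rate(self) -> float:
        return self.conversions / self.visitors

    def click_through_rate(self) -> float:
        return self.clicks / self.impressions

    def bounce_rate(self) -> float:  # lower is better
        return self.single_page_sessions / self.sessions

    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors

# Hypothetical totals for one variant, for illustration only.
control = VariantStats(visitors=10_000, conversions=320, impressions=10_000,
                       clicks=1_200, sessions=10_500,
                       single_page_sessions=4_100, revenue=25_600.0)
print(f"{control.conversion_rate():.2%}")  # 3.20%
```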

Essential Strategies for A/B Testing Success:

1. Clearly Define Objectives:

Turn your overall goal into a specific, testable hypothesis before each test begins, for example: "Reducing the sign-up form from six fields to three will increase form submissions." Choose one primary metric to judge the result by and record it in advance; this keeps the analysis focused and stops you from picking whichever metric happens to look best once the data is in.

2. Focus on One Variable at a Time:

To isolate the impact of specific changes and draw accurate conclusions from A/B tests, it’s essential to focus on testing one variable at a time. Whether you’re testing headline variations, button colours, or pricing strategies, limiting the scope of each test ensures clarity and facilitates meaningful insights.

3. Prioritise High-Impact Elements:

While it’s tempting to test every conceivable element on a webpage or app screen, it’s more productive to prioritise high-impact elements that are likely to yield significant improvements in conversion rates. Start with elements that have the most direct influence on user behaviour, such as headlines, calls-to-action, and page layouts.

4. Implement Proper Testing Methodology:

Maintain rigour and consistency in your A/B testing methodology to ensure reliable results. This includes calculating, before the test starts, a sample size large enough to detect the smallest improvement you care about, randomising traffic allocation between variants, and adhering to best practices for experimental design. By following established testing protocols, you can trust the validity of your findings and make informed optimisation decisions.
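How large is large enough? For a conversion-rate test, a standard normal-approximation formula for comparing two proportions gives the number of visitors needed per variant from the baseline conversion rate, the smallest relative lift worth detecting, the significance level (alpha) and the desired statistical power. This is a minimal sketch assuming a two-sided test and an even traffic split; the function name is hypothetical, and dedicated calculators or statistics libraries may use slightly different approximations.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion test.

    Uses the usual normal approximation; baseline_rate is the control's
    conversion rate and min_relative_lift the smallest relative improvement
    worth detecting (0.10 means +10%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

# 3% baseline, detect a 10% relative lift (3.0% -> 3.3%)
print(sample_size_per_variant(0.03, 0.10))  # roughly 53,000 visitors per variant
```

Small lifts on low baseline rates need large samples, which is why the minimum detectable effect should be chosen before the test rather than after.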

5. Iterate Continuously:

A/B testing is not a one-time endeavour but a continuous cycle of experimentation and optimisation. Embrace a culture of iteration within your organisation, where learnings from each test inform subsequent iterations and improvements. By consistently refining your approach based on data-driven insights, you can unlock the full potential of A/B testing to drive conversions.

Common Mistakes to Avoid:

Steering clear of common mistakes is vital for the success of any A/B testing campaign. Here are some crucial pitfalls to avoid:

1. Testing Too Many Variables at Once:

Testing multiple variables simultaneously can muddy the results, making it challenging to determine which changes drove the observed differences in performance. Focus on testing one variable at a time to isolate its impact accurately.

2. Ignoring Statistical Significance:

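Drawing conclusions from A/B tests without achieving statistical significance can lead to erroneous decisions, because a difference seen in a small or noisy sample may simply be chance. Ensure that your sample size is sufficient to detect meaningful differences between variations, and confirm that the observed difference is significant at the threshold you set before the test (commonly a 5% significance level) before acting on it.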
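One common way to check significance for conversion rates is a pooled two-proportion z-test. The sketch below is illustrative: the function name and the example counts are hypothetical, it relies on a normal approximation that is reasonable only when each variant has a decent number of conversions, and a chi-squared test or a statistics library would serve equally well.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were identical
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: A converts 320 of 10,000 visitors, B converts 380 of 10,000.
z, p = two_proportion_z_test(320, 10_000, 380, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # p is roughly 0.02, below a 5% threshold
```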

3. Not Defining Clear Objectives:

Failing to establish clear objectives and key performance indicators (KPIs) can undermine the effectiveness of A/B testing efforts. Clearly define what you aim to achieve with each test and how you’ll measure success to guide experimentation and analysis.

4. Overlooking Segmentation:

Neglecting to segment your audience appropriately can obscure valuable insights and limit the relevance of your test results. Consider factors such as demographics, user behaviour, or traffic sources when designing experiments to ensure meaningful comparisons.
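A per-segment breakdown can be computed from visitor-level data. The sketch assumes you can export one row per visitor with a segment label (device type, traffic source, and so on), the variant shown and whether the visitor converted; this input format and the function name are hypothetical. Each segment contains only part of your traffic, so a difference that appears within one segment needs its own significance check, and segments are best chosen before the test rather than after looking at the results.

```python
from collections import defaultdict

def conversion_by_segment(events):
    """Return {(segment, variant): (visitors, conversion_rate)}.

    `events` is an iterable of (segment, variant, converted) tuples, one per
    visitor, e.g. ("mobile", "B", True).
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for segment, variant, converted in events:
        counts = totals[(segment, variant)]
        counts[0] += 1
        counts[1] += int(converted)
    # Keep the visitor count next to each rate: small segments give noisy rates.
    return {key: (visitors, conversions / visitors)
            for key, (visitors, conversions) in totals.items()}

events = [("mobile", "A", True), ("mobile", "A", False), ("desktop", "B", True)]
print(conversion_by_segment(events))
```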

5. Confirmation Bias:

Succumbing to confirmation bias, where you interpret results to confirm preconceived notions or preferences, can skew your interpretation of A/B test outcomes. Approach experimentation with an open mind and let data guide your decisions, even if it challenges assumptions.

6. Stopping Tests Prematurely:

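Ending tests early, whether out of impatience or because an early lead looks decisive, can lead to inaccurate conclusions. Checking results repeatedly and stopping as soon as a difference looks significant greatly increases the chance of declaring a winner that is not really better. Decide on the sample size or duration in advance and let the test run until it is reached, ideally over whole weeks so that both weekday and weekend behaviour are represented.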
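The cost of stopping at the first promising result can be demonstrated with a small simulation. The sketch below runs A/A tests, in which both variants share the same true conversion rate, so every declared winner is a false positive. It compares two stopping rules: checking a pooled two-proportion z-test after every batch of visitors and stopping at the first significant look, versus a single check at the planned sample size. All parameter values and function names are arbitrary, hypothetical choices for illustration. With these settings, expect the peeking rule's false-positive rate to come out well above the nominal 5% while the fixed-horizon rule stays near it; exact figures depend on the seed.

```python
import math
import random
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% threshold, about 1.96

def z_stat(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z statistic (0 when there is no variation yet)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool == 0 or p_pool == 1:
        return 0.0
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    return (conv_b / n_b - conv_a / n_a) / se

def simulate_aa_tests(n_tests=300, visitors_per_arm=1_000, check_every=50,
                      rate=0.05, seed=1):
    """Return (peeking false-positive rate, fixed-horizon false-positive rate).

    Both arms share the same true conversion rate, so any "significant"
    result is a false positive.
    """
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        peeker_stopped = False
        for n in range(1, visitors_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            # Peeking rule: check after every batch; the first significant look
            # is where an impatient tester would stop. The simulation keeps
            # collecting data so the fixed rule can use the same visitors.
            if n % check_every == 0 and abs(z_stat(conv_a, n, conv_b, n)) > Z_CRIT:
                peeker_stopped = True
        peeking_hits += peeker_stopped
        # Fixed-horizon rule: a single check at the planned sample size.
        fixed_hits += abs(z_stat(conv_a, visitors_per_arm, conv_b, visitors_per_arm)) > Z_CRIT
    return peeking_hits / n_tests, fixed_hits / n_tests

peeking_rate, fixed_rate = simulate_aa_tests()
print(f"peeking: {peeking_rate:.1%}, fixed horizon: {fixed_rate:.1%}")
```

Because the final look of the peeking rule is the same check the fixed-horizon rule makes, peeking can only add false positives, never remove them.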

7. Ignoring User Feedback:

Relying solely on quantitative metrics and disregarding qualitative feedback from users can mean missing valuable insights into user preferences and behaviour, particularly into why a variant won or lost. Incorporate user feedback and insights into your testing strategy to complement quantitative analysis.

8. Not Documenting Learnings:

Failing to document learnings and insights from A/B tests can hinder knowledge sharing and future optimisation efforts. Maintain detailed records of experiment designs, results, and conclusions to inform future testing iterations and organisational learning.
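A lightweight way to keep such records consistent is to give every experiment the same structured entry. The sketch below is one possible schema, not a standard: the class, its fields and every value in the example record are hypothetical placeholders, and it assumes Python 3.9 or later for the built-in generic type hints. Storing entries as JSON keeps them easy to search, diff and share.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date

@dataclass
class ExperimentRecord:
    """One entry in a team's experiment log (hypothetical schema)."""
    name: str
    hypothesis: str
    primary_metric: str
    start: date
    end: date
    visitors_per_variant: dict[str, int]
    result: str      # e.g. "B won", "no significant difference"
    decision: str    # what was shipped or changed as a result
    learnings: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        data = asdict(self)
        # JSON has no date type, so store dates as ISO 8601 strings.
        data["start"] = self.start.isoformat()
        data["end"] = self.end.isoformat()
        return json.dumps(data, indent=2)

# Placeholder values for illustration only.
record = ExperimentRecord(
    name="homepage-headline",
    hypothesis="A benefit-led headline will increase newsletter sign-ups",
    primary_metric="sign-up conversion rate",
    start=date(2024, 3, 4),
    end=date(2024, 3, 31),
    visitors_per_variant={"A": 12_000, "B": 12_000},
    result="no significant difference",
    decision="keep control; test a shorter sign-up form next",
    learnings=["Headline wording alone did not move sign-ups"],
)
print(record.to_json())
```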

By avoiding these common mistakes and adhering to best practices, marketers can maximise the effectiveness of their A/B testing campaigns and drive meaningful improvements in conversion rates and user experiences.